What is SCINet?

The SCINet initiative is an effort by the USDA Agricultural Research Service (ARS ⤴) to grow USDA’s research capacity by providing scientists with access to

- high-performance computing clusters [SCINet HPC Systems]

- high-speed networking for data transfer

- training in scientific computing

- virtual research support

Website

An official SCINet website is hosted on the United States government domain at https://scinet.usda.gov ⤴

Use the SCINet website to request SCINet accounts, access user guides, get technical support, and find out about upcoming and previous training events.

Much of the material in this introduction is sourced from the SCINet website.

The SCINet homepage is shown in the screenshot below.

Cite SCINet

Add the following sentence as an acknowledgment for using CERES as a resource in your manuscripts meant for publication:

“This research used resources provided by the SCINet project of the USDA Agricultural Research Service, ARS project number 0500-00093-001-00-D.”

Before you get started

Before you get started actively using the SCINet resources, perform the A, B, and C steps to familiarize yourself with the SCINet initiative.

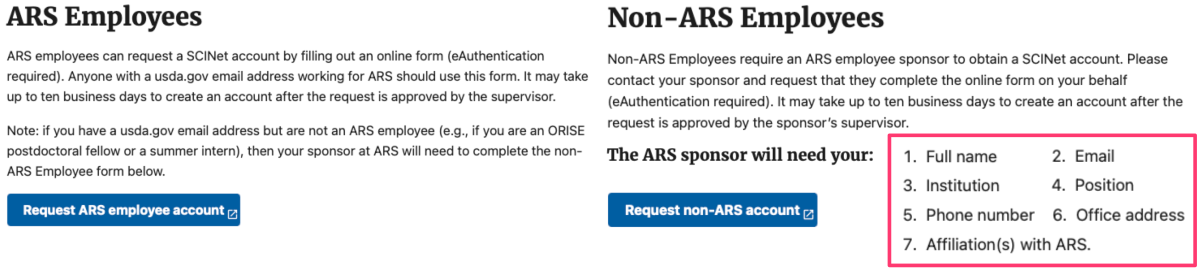

A. Sign up for a SCINet account

A SCINet account is required to access the SCINet HPC Systems and specialized SCINet content, such as trainings, recorded workshops, and the SCINet Forum ⤴.

To obtain a SCINet account, a SCINet Account Request must be submitted:

- for ARS-affiliated users: SCINet Account Request ⤴

- for non-ARS users: Non-ARS SCINet Account Request ⤴

The approval process depends on the affiliation of the requester.

Once your request is approved you should get the “Welcome to SCINet” email with further instructions.

…from the official Sign up for a SCINet account ⤴ guide.

B. Read the SCINet Policy

Read the SCINet Policy before you start using the SCINet resources. The policy is concise and contains important information about how the resources may be used, including any restrictions or limitations on use, so it helps you understand what you can and cannot do. In particular, you can learn about:

C. Know where to find help

| 1. read FAQs ⤴ | 2. read GUIDEs ⤴ |
|---|---|
| 3. contact VRSC ⤴ | 4. use FORUM ⤴ |

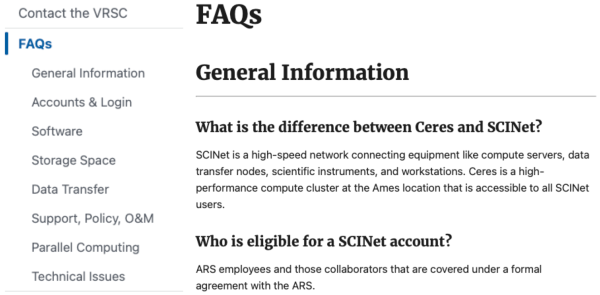

1. read FAQs

It is generally a good idea to browse the Frequently Asked Questions (SCINet FAQ ⤴) section first because it can save you time and effort. The FAQ section is designed to provide answers to common questions that users may have about the SCINet service.

By browsing the FAQ section, you may quickly find the answer to your question without having to contact the SCINet support team or search through other parts of the website.

Quick preview of the SCINet FAQ ⤴ below:

General Information

What is the difference between Ceres and SCINet? ⤴

Who is eligible for a SCINet account? ⤴

How do I find documentation on Ceres and SCINet? ⤴

How much does a Ceres account cost? ⤴

How much does Amazon Web Services (AWS) cost? ⤴

Who manages SCINet? ⤴

Who can I contact for help using SCINet? ⤴

How do I use Basecamp? ⤴

How do I acknowledge SCINet in my publications? ⤴

Accounts & Login

How do I get an account (I am an ARS employee)? ⤴

How do I get an account for non-ARS collaborators, students, or postdocs? ⤴

How do I reactivate my account? ⤴

How do I reset or change my password? ⤴

What are the SCINet password requirements? ⤴

How do I login to Ceres? ⤴

I took my onboarding a long time ago, how do I get a refresher course? ⤴

Software

What software is available on SCINet? ⤴

How do I request software to be loaded onto Ceres? ⤴

How do I install my own software programs? ⤴

How do I compile software? ⤴

What is Galaxy? ⤴

How do I login to SCINet Galaxy? ⤴

How do I request software to be loaded onto SCINet Galaxy? ⤴

Storage Space

How much data can I store on Ceres? ⤴

How do I request an increase in storage space? ⤴

How do I request access to a project directory? ⤴

Data Transfer

How do I get my data onto and off of Ceres? ⤴

How do I get my data onto Ceres via SCINet Galaxy? ⤴

Support, Policy, O&M

What is the Virtual Research Support Core (VRSC)? ⤴

How do I contact the VRSC for assistance? ⤴

Who is the SCINet program manager? ⤴

What is the Scientific Advisory Committee (SAC)? ⤴

Parallel Computing

How do I write a batch script to submit a compute job? ⤴

How do I compile MPI codes? ⤴

Technical Issues

My terminal window keeps freezing. Is there something I can do to stop this? ⤴

I log in at the command line but the system keeps logging me out. Is there something I can do to stop this? ⤴

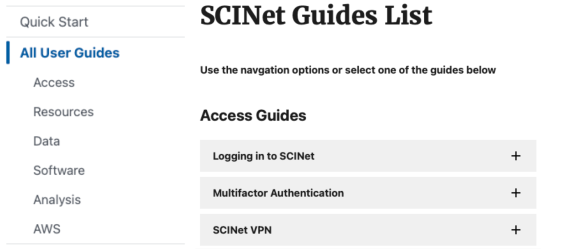

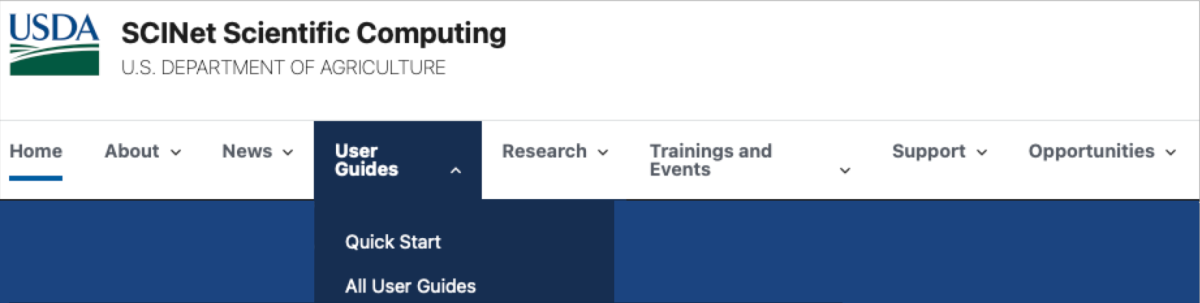

2. read GUIDEs

Reading user guides can be a good starting point to get an overview on how to use the SCINet services. You can easily find the links to the User Guides in the top dropdown menu on the SCINet website ⤴.

- Quick Start ⤴, to get started with SCINet

- All User Guides ⤴, to open a list of guides grouped in categories:

  - Access Guides ⤴ (login, authentication, VPN, command line, Open OnDemand, nomenclature)

  - Resources Guides ⤴ (clusters: Ceres & Atlas, cite SCINet, external computing resources)

  - Data Guides ⤴ (data & storage, storage quotas, data transfer including cloud resources)

  - Software Guides ⤴ (loading modules, loading containers, preinstalled software, user-installed software)

  - Analysis Guides ⤴ (CLC server; R, Rstudio, Python, Perl; SMRTLINK/Analysis, Galaxy, Geneious)

  - AWS Guides ⤴

3. contact VRSC

If your question is not on the FAQs list or the answer in the guide is not comprehensive, please contact the VRSC support team.

Email is a good way to contact the SCINet support team for information or direct help. There are two addresses assigned to different needs:

- scinet_vrsc@usda.gov

  - use it for questions or feedback about the website, SCINet newsletter or to contribute content

  - to get technical assistance with your SCINet account

  - to get broad HPC support from the Virtual Research Support Core ⤴ (VRSC)

  - Learn more about How and When to Contact the VRSC ⤴

- scinet-training@usda.gov

  - use it for all inquiries for help from the SCINet Office

4. use SCINet Forum

Finally, contact other SCINet users on the SCINet Forum ⤴ to get a quick response to your question.

The forum is actively monitored by community members who are willing to help others, so you may be able to get an answer to your question in a short amount of time. Another benefit is that you can get a variety of perspectives on your question. By asking your question on a forum, you can get input from multiple people who may have different experiences and expertise. Finally, the SCINet forum can be a good resource for learning more about a particular topic. By reading through previous discussions and questions on the forum, you may be able to learn more about the issue and get ideas for your own questions.

SCINet HPC System

A High Performance Computing (HPC) system offers a computational environment that can process data and perform intricate computations at high speed.

The SCINet HPC System has three components:

- computing nodes that are connected together and configured to a consistent system environment:

  - Ceres cluster ⤴, located in Ames (IA), with more than 9000 compute cores (18000 logical cores), 110 terabytes (TB) of total RAM, 500 TB of total local storage, and 3.7 petabytes (PB) of shared storage.

  - Atlas cluster ⤴, located in Starkville (MS), with 8260 processor cores, 101 terabytes of RAM, 8 NVIDIA V100 GPUs, and a Mellanox HDR100 InfiniBand interconnect.

- data storage to manage and store the data and results:

  - short-term storage on each computing cluster

  - Juno storage ⤴, located in Beltsville (MD), a large, multi-petabyte ARS long-term storage, periodically backed up to a tape device.

- high-speed network that facilitates efficient data transfer across compute nodes and communication between network components

In addition to the Ceres and Atlas clusters, there are external computing resources ⤴ available to the SCINet community, including Amazon Web Services ⤴, XSEDE ⤴, and the Open Science Grid ⤴.

To fully understand the user guides, first familiarize yourself with the SCINet nomenclature ⤴.

Access Guides

Explore comprehensive user guides in category: Access Guides ⤴

Ceres vs. Atlas clusters

General settings

| feature | Ceres | Atlas |
|---|---|---|
| login node | @ceres.scinet.usda.gov | @atlas-login.hpc.msstate.edu |
| transfer node | @ceres-dtn.scinet.usda.gov | @atlas-dtn.hpc.msstate.edu |
| Open OnDemand | https://ceres-ondemand.scinet.usda.gov/ | https://atlas-ood.hpc.msstate.edu |
| home directory quota | displayed at login ; type my_quotas | type quota -s |
| project directory quota | displayed at login ; type my_quotas | default 1TB ; type /apps/bin/reportFSUsage -p proj1,proj2,proj3 |
| preinstalled software | Software preinstalled on Ceres ⤴ ; Ceres Container Repository ⤴ | Singularity container image files in the Ceres Container Repository are synced to Atlas daily. For more information about software on Atlas, see Atlas Documentation ⤴. |
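The login node addresses in the table above start with `@`, which suggests the usual `user@host` form. Below is a minimal Python sketch, assuming a hypothetical username `first.last`, that assembles the corresponding `ssh` command lines. It only builds strings and does not connect anywhere; the function name `ssh_command` is illustrative and not part of any SCINet tooling.

```python
# Host names copied from the "General settings" table above.
LOGIN_NODES = {
    "ceres": "ceres.scinet.usda.gov",
    "atlas": "atlas-login.hpc.msstate.edu",
}


def ssh_command(cluster: str, username: str) -> str:
    """Return the ssh command line for a cluster's login node."""
    host = LOGIN_NODES[cluster.lower()]
    return f"ssh {username}@{host}"


# "first.last" is a placeholder, not a real account name.
for name in LOGIN_NODES:
    print(ssh_command(name, "first.last"))
```

Keeping the host names in one dictionary means the same lookup can be reused if the addresses ever change.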

Submitting jobs

| feature | Ceres | Atlas | notes |
|---|---|---|---|
| job scheduler | slurm | slurm | documentation ⤴ ; Useful SLURM Commands ⤴ ; Interactive Mode (use node live) ⤴ ; Batch Mode (submit job) ⤴ |
| default walltime | partition’s max simulation time | 15 min | see partitions row in this table to learn about partition’s max simulation time on Ceres ; on Atlas max walltime depends on the selected queues |
| default allocation | 2 cores on 1 node | 1 core on 1 node | on Ceres hyper-threading is turned on (the smallest allocation is 2 logical cores) |
| valid account | required | required | To see which accounts ⤴ you are on, along with valid QOS’s for that account, use ; on Atlas: sacctmgr show associations where user=$USER format=account%20,qos%50 ; on Ceres: sacctmgr -Pns show user format=account,defaultaccount |
| queues [max_walltime] | see partitions row | normal [14d] ; debug [30 min] ; special ; priority | on Atlas, special and priority queues must be requested and approved ; on Ceres queues are replaced by the functional groups of nodes called partitions |
| cores per node | 72 or 80 or 96 | 48 | see Partitions or Queues ⤴ and Ceres Technical Overview ⤴ to learn details about logical cores per specific groups of nodes on Ceres ; see Requesting the Proper Number of Nodes and Cores ⤴ |
| partitions [nodes/mem GB/time] | short [41/384/48h] ; medium [32/384/7d] ; long [11/384/21d] ; long60 [2/384/60d] ; debug [2/384/1h] ; mem [4/1250/7d] ; mem768 [1/768/7d] ; longmem [1/1250/1000h] ; + priority nodes* | atlas [228/384] ; bigmem [8/1536] ; gpu [4/384] ; service [2/192] ; development [2/768] ; development-gpu [2/384] | see Partitions or Queues ⤴ to learn details about *priority nodes on Ceres |
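As a rough illustration of how the Ceres time limits in the partitions row could guide a job request, the Python sketch below picks the general-purpose partition with the smallest limit that still fits a requested walltime and renders a minimal Slurm batch script. The selection policy, the helper names, and the account name `my_project` are assumptions made for this example. Only the time limits come from the table, and `--account`, `--partition`, `--time`, and `--ntasks` are standard `sbatch` options.

```python
# Max walltime in hours for the general-purpose Ceres partitions,
# taken from the "partitions" row of the table above.
CERES_MAX_HOURS = {
    "short": 48,
    "medium": 7 * 24,
    "long": 21 * 24,
    "long60": 60 * 24,
}


def pick_partition(hours: float) -> str:
    """Return the partition with the smallest time limit that still fits."""
    fitting = [(limit, name) for name, limit in CERES_MAX_HOURS.items()
               if hours <= limit]
    if not fitting:
        raise ValueError(f"{hours} h exceeds every partition limit listed here")
    return min(fitting)[1]


def slurm_time(hours: int) -> str:
    """Format a whole number of hours as Slurm's D-HH:MM:SS."""
    days, rest = divmod(hours, 24)
    return f"{days}-{rest:02d}:00:00"


def render_batch_script(account: str, hours: int, ntasks: int, command: str) -> str:
    """Assemble the text of a minimal batch script."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --account={account}",   # hypothetical account name
        f"#SBATCH --partition={pick_partition(hours)}",
        f"#SBATCH --time={slurm_time(hours)}",
        f"#SBATCH --ntasks={ntasks}",
        "",
        command,
    ]
    return "\n".join(lines) + "\n"


print(render_batch_script("my_project", 72, 2, "echo hello"))
```

The generated text would be saved to a file and submitted with `sbatch`; partition limits can change, so the official Partitions or Queues guide remains the authority.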

For a more comprehensive description, see the detailed SCINet guide on Differences between Ceres and Atlas ⤴.

Ceres Guides

Ceres login node: @ceres.scinet.usda.gov

Ceres transfer node: @ceres-dtn.scinet.usda.gov

Ceres Getting started tutorial: (in this workbook)

Ceres computing cluster User Guides by SCINet:

- Onboarding Videos ⤴

- Technical Overview ⤴

- see Logging in to SCINet ⤴

  - Ceres login node: @ceres.scinet.usda.gov

  - Using Linux Command Line Interface ⤴

  - Using web-based Open OnDemand Interface ⤴

    - Access Ceres OpenOnDemand at https://ceres-ondemand.scinet.usda.gov/

    - Explore OOD guides ⤴

- Software on Ceres:

  - Software preinstalled on Ceres ⤴

  - User-Installed Software on Ceres with Conda ⤴

  - learn more from the universal Software guides ⤴ section

Atlas Guides

Atlas login node: @atlas-login.hpc.msstate.edu

Atlas transfer node: @atlas-dtn.hpc.msstate.edu

Atlas Getting started tutorial: (in this workbook)

Atlas computing cluster User Guides by SCINet:

In the one-page documentation you will find the sections listed below. To navigate to the selected topic, press CTRL + F on your keyboard (on macOS use COMMAND + F), and copy-paste the name of the section.

- Node Specifications

- Accessing Atlas

- File Transfers

- Internet Connectivity

- Modules

- Quota

- Project Space

- Local Scratch Space

- Arbiter

- Slurm

- Available Atlas QOS’s

- Available Atlas Partitions

- Nodesharing

- Job Dependencies and Pipelines

- Atlas Job Script Generator

- Open OnDemand access

- Virtual Desktops

- RStudio Server and Jupyter Notebooks

- see Logging in to SCINet ⤴

  - Atlas login node: @atlas-login.hpc.msstate.edu

  - Using Linux Command Line Interface ⤴

  - Using web-based Open OnDemand Interface ⤴

    - Access Atlas OpenOnDemand at https://atlas-ood.hpc.msstate.edu

    - Explore OOD guides ⤴

- Software on Atlas:

  - learn more from the universal Software guides ⤴ section

SCINet Data Transfer & Storage

The SCINet HPC infrastructure contains data storage distributed among locations and intended for various purposes:

- Tier 1 Storage on each HPC cluster (Ceres, Atlas) is local storage for code, data, and intermediate results; it is NOT backed up.

- Juno storage is a multi-petabyte ARS storage device used for long-term storage of data and results; it is periodically backed up to a tape device.

- Tape backup is an off-site backup of Juno, NOT directly accessible to regular SCINet users.
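Moving data to or from cluster storage typically goes through a transfer node. The Python sketch below, offered as an illustration only, builds an `rsync` command that copies a local folder to a cluster via its transfer node. The username `first.last`, the remote path `/project/my_project/results/`, and the helper name `rsync_upload` are hypothetical; the host names are the transfer nodes listed in this guide, and `-a` (archive) and `-v` (verbose) are standard `rsync` flags.

```python
import shlex

# Transfer node host names as listed in this guide.
TRANSFER_NODES = {
    "ceres": "ceres-dtn.scinet.usda.gov",
    "atlas": "atlas-dtn.hpc.msstate.edu",
}


def rsync_upload(cluster: str, username: str, local_path: str, remote_path: str) -> list:
    """Build an rsync argument list that copies local_path to the cluster."""
    host = TRANSFER_NODES[cluster]
    return ["rsync", "-av", local_path, f"{username}@{host}:{remote_path}"]


# Placeholder username and project path; replace with your own.
cmd = rsync_upload("ceres", "first.last", "results/", "/project/my_project/results/")
print(shlex.join(cmd))
```

Swapping the last two arguments of the resulting command would copy data in the other direction, which matters because Tier 1 storage is not backed up.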

To learn how to manage data on SCINet, explore the comprehensive user guides offered by SCINet.
