Downloading files using wget

Wget, short for “World Wide Web get,” is a command-line utility used to download files from websites or web servers. It supports downloading via HTTP, HTTPS, and FTP protocols and can resume interrupted downloads. This tool is favored for its ability to retrieve files recursively and is commonly used in scripts and automated tasks.

Learning Objectives

Upon completion of this section, the learner will be able to:

- Use wget to download files

- Download multiple files using wildcard patterns and regular expressions

- Download an entire website

Here is a generic example of how to use wget to download a file:

wget http://link.edu/filename
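Two common variations on the generic command are saving into a particular directory (with resume support) and choosing the local file name yourself. The sketch below wraps them in small helper functions. The names fetch_into and fetch_as are hypothetical. Also note that -c only helps when the server supports resuming (FTP, or HTTP servers that honor Range requests).

```bash
#!/usr/bin/env bash
# Minimal sketch of two helpers around single-file downloads.
# fetch_into and fetch_as are hypothetical names, not part of wget.

# fetch_into DIR URL
# Save URL into directory DIR, keeping the file name wget derives from the URL.
#   -c : continue a partially downloaded file instead of starting from zero
#   -P : directory prefix; wget creates DIR if it does not exist
fetch_into() {
  wget -c -P "$1" "$2"
}

# fetch_as URL NAME
# Save URL under a local file name of your choice.
#   -O : write the downloaded document to NAME (an existing NAME is overwritten)
fetch_as() {
  wget -O "$2" "$1"
}

# Example calls (http://link.edu/filename is the placeholder URL used above):
# fetch_into downloads http://link.edu/filename
# fetch_as http://link.edu/filename my_copy.txt
```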

Here are a few specific examples:

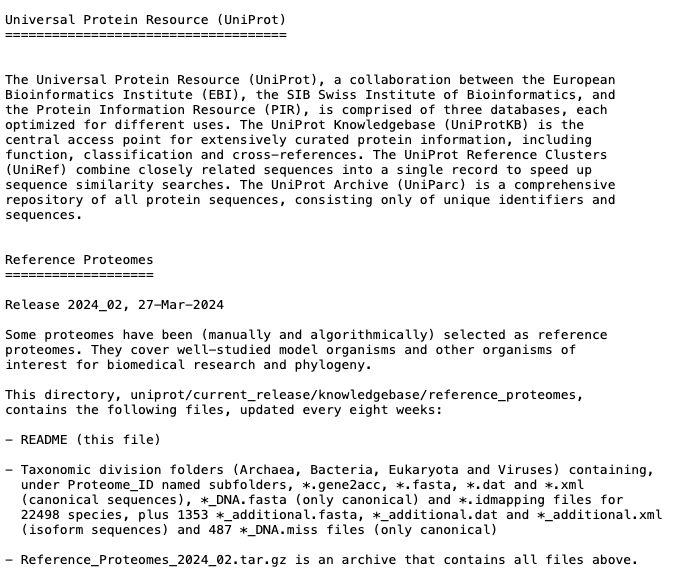

Photo of a kitten in Rizal Park:

wget https://upload.wikimedia.org/wikipedia/commons/0/06/Kitten_in_Rizal_Park%2C_Manila.jpg

Photo of Arabidopsis:

wget https://upload.wikimedia.org/wikipedia/commons/6/6f/Arabidopsis_thaliana.jpg

Text file: UniProt reference_proteomes README:

wget https://ftp.uniprot.org/pub/databases/uniprot/current_release/knowledgebase/reference_proteomes/README

When each download finishes, wget prints a final progress line:

Kitten_in_Rizal_Park,_Manila.jpg 100%[===============================>] 559.14K 1.84MB/s in 0.3s

Arabidopsis_thaliana.jpg 100%[===============================>] 39.73K --.-KB/s in 0.07s

README 100%[===============================>] 1.61M 3.83MB/s in 0.4s
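wget accepts several URLs on one command line, and it can also read them from a text file with -i. As a minimal sketch, assuming a hypothetical list file named urls.txt and a hypothetical output directory, the three downloads above could be batched like this:

```bash
#!/usr/bin/env bash
# Sketch: batch several downloads by listing their URLs in a text file.
# urls.txt is a hypothetical name; any plain-text file with one URL per line works.
cat > urls.txt <<'EOF'
https://upload.wikimedia.org/wikipedia/commons/0/06/Kitten_in_Rizal_Park%2C_Manila.jpg
https://upload.wikimedia.org/wikipedia/commons/6/6f/Arabidopsis_thaliana.jpg
https://ftp.uniprot.org/pub/databases/uniprot/current_release/knowledgebase/reference_proteomes/README
EOF

# fetch_list LISTFILE DIR  (hypothetical helper name)
#   -i FILE : read the URLs to download from FILE
#   -P DIR  : save every downloaded file under DIR
fetch_list() {
  wget -i "$1" -P "$2"
}

# Example call: fetch_list urls.txt examples
```

Keeping the URLs in a file makes the download easy to rerun or to reuse inside a larger script.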

Sometimes you need to download an entire directory of files, and doing that with wget is not straightforward.

The next section shows how.

wget for multiple files and directories

There are two options:

- You can filter files by name with a wildcard pattern (the -A or --accept option).

- Alternatively, you can match a regular expression against the full URL, including its directory path (the --accept-regex option).

The first option is useful when you have a large number of files in a directory and you want to download only those that match a specific format, such as .fasta files.

For example, to download the .tar.gz files whose names start with “bar” from a specific directory:

wget -r --no-parent -A 'bar*.tar.gz' http://url/dir/
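-A (--accept) takes a comma-separated list of file-name suffixes or shell-style wildcard patterns, not regular expressions. As a sketch, assuming a directory listing that contains .fasta and .fa files, the hypothetical helper fetch_fasta below keeps only those files and saves them flat into one local folder instead of recreating the remote directory tree.

```bash
#!/usr/bin/env bash
# Sketch: download only FASTA files linked from one directory listing.
# fetch_fasta URL DEST  (hypothetical helper; URL should end with "/")
fetch_fasta() {
  local url=$1 dest=$2
  # -r -l 1     : recurse, but only one level deep (files linked from the listing)
  # --no-parent : never climb above the starting directory
  # -nd         : don't recreate the remote directory tree locally
  # -A LIST     : comma-separated suffixes or wildcard patterns to keep
  # -P DEST     : save the accepted files under DEST
  wget -r -l 1 --no-parent -nd -A '*.fasta,*.fa' -P "$dest" "$url"
}

# Example call (http://url/dir/ is the placeholder used above):
# fetch_fasta http://url/dir/ fasta_files
```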

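The second option is useful when you need to download files that follow the same naming pattern but sit in different directories:

wget -r --no-parent --accept-regex='/pub/current_fasta/.*/dna/.*dna\.toplevel\.fa\.gz' ftp://ftp.ensembl.org/pub/current_fasta/

Because -r recreates the remote directory structure locally, files from different directories are kept apart and cannot overwrite one another. Note that --accept-regex expects a regular expression, so "any characters" is written .* (not the shell wildcard *) and a literal dot is written \.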
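--accept-regex is matched against the whole URL and uses regular-expression syntax, not shell wildcards: * means "repeat the previous character", and "anything" is written .*. Before starting a long recursive crawl, you can check a pattern offline. This sketch assumes grep -E treats the pattern the same way as wget's default POSIX regex type, and the candidate URLs are illustrative examples modeled on the Ensembl FTP layout, not a real listing.

```bash
#!/usr/bin/env bash
# Sketch: preview which URLs a regular expression would accept, without network access.
# Assumption: grep -E matches like wget's default POSIX regex type for this pattern.

# Regex form: ".*" means "any characters" and "\." is a literal dot.
pattern='/pub/current_fasta/.*/dna/.*dna\.toplevel\.fa\.gz'

# Glob-style form: here "*" only repeats the preceding "/", so species folders never match.
glob_style='/pub/current_fasta/*/dna/*dna.toplevel.fa.gz'

# Illustrative URLs modeled on the Ensembl FTP layout (not a real directory listing).
candidates='ftp://ftp.ensembl.org/pub/current_fasta/homo_sapiens/dna/Homo_sapiens.GRCh38.dna.toplevel.fa.gz
ftp://ftp.ensembl.org/pub/current_fasta/homo_sapiens/dna/Homo_sapiens.GRCh38.dna.primary_assembly.fa.gz
ftp://ftp.ensembl.org/pub/current_fasta/mus_musculus/dna/Mus_musculus.GRCm39.dna.toplevel.fa.gz
ftp://ftp.ensembl.org/pub/current_fasta/homo_sapiens/cdna/Homo_sapiens.GRCh38.cdna.all.fa.gz'

echo "Accepted by the regex:"
printf '%s\n' "$candidates" | grep -E "$pattern"

echo "Number of matches for the glob-style pattern:"
# grep -c exits with status 1 when the count is 0, so ignore that status here
printf '%s\n' "$candidates" | grep -cE "$glob_style" || true

# Once the pattern looks right, pass it to wget unchanged:
# wget -r --no-parent --accept-regex="$pattern" ftp://ftp.ensembl.org/pub/current_fasta/
```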

To download a series of sequentially numbered files, you can use shell brace expansion (available in Bash and Zsh):

wget http://localhost/file_{1..5}.txt

This downloads:

- file_1.txt

- file_2.txt

- file_3.txt

- file_4.txt

- file_5.txt
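The braces are expanded by the shell before wget starts, so wget simply receives five separate URLs. Putting echo in front of the command previews that expansion without downloading anything. The zero-padded and stepped ranges below assume Bash 4 or newer, and the file names are hypothetical.

```bash
#!/usr/bin/env bash
# Preview brace expansion by printing the command instead of running it.

# Plain range: expands to file_1.txt through file_5.txt
echo wget http://localhost/file_{1..5}.txt

# Zero-padded range (Bash 4+): file_01.txt through file_05.txt
echo wget http://localhost/file_{01..05}.txt

# Range with a step (Bash 4+): file_0.txt, file_10.txt, file_20.txt
echo wget http://localhost/file_{0..20..10}.txt

# Quoting disables the expansion: wget would receive the literal braces
echo wget 'http://localhost/file_{1..5}.txt'
```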

To download an entire website (every page and file on that host that can be reached by following links), use the --mirror option:

wget --mirror -p --convert-links -P ./LOCAL-DIR WEBSITE-URL
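--mirror is shorthand for -r -N -l inf --no-remove-listing, so the command above crawls as fast as the server allows. As a sketch, assuming you want a gentler crawl and a copy that opens cleanly in a browser, the hypothetical helper mirror_site adds a few more standard wget options. The 1-second wait and the 200k rate cap are arbitrary example values.

```bash
#!/usr/bin/env bash
# Sketch: a "polite" mirror of a site into a local directory.
# mirror_site URL DEST  (hypothetical helper name)
mirror_site() {
  local url=$1 dest=$2
  # --mirror             : -r -N -l inf --no-remove-listing (recursive, timestamped)
  # -p                   : also fetch images, CSS, etc. needed to display each page
  # --convert-links      : rewrite links so the local copy can be browsed offline
  # --adjust-extension   : save HTML pages with an .html suffix
  # --no-parent          : never ascend above the starting directory
  # --wait / --limit-rate: pause between requests and cap bandwidth
  wget --mirror -p --convert-links --adjust-extension --no-parent \
       --wait=1 --limit-rate=200k -P "$dest" "$url"
}

# Example call (https://example.org/docs/ is a placeholder URL):
# mirror_site https://example.org/docs/ ./LOCAL-DIR
```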

Other options to consider

| Option | What it does | Use case |
|---|---|---|
| --limit-rate=20k | Limits the download speed to 20 KiB/s | Throttle the data rate so you don't affect other users accessing the server. |
| --spider | Checks whether a file exists without saving it | Useful when you only want to know whether a file is still available. |
| -w SECONDS (--wait=SECONDS) | Waits the given number of seconds between requests | Spread out requests so you don't overload the server. |
| --user=USER | Sets the username | Wget logs in with the username provided. |
| --password=PASSWORD | Sets the password | Used together with --user to authenticate. |
| --ftp-user=USER --ftp-password=PASSWORD | Sets FTP credentials | Log in to an FTP server to retrieve files. |
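--spider is handy in scripts because wget's exit status reports the result: 0 when the request succeeds, non-zero otherwise (for example 8 when the server answers with an error such as 404). The sketch below, with hypothetical function names url_exists and report_urls, checks a list of URLs before anything is downloaded.

```bash
#!/usr/bin/env bash
# Sketch: check URLs with --spider before downloading them.

# url_exists URL : exit status 0 if wget's request succeeds
url_exists() {
  # -q       : print nothing; rely on wget's exit status only
  # --spider : ask the server for the file but don't save it
  wget -q --spider "$1"
}

# report_urls URL... : print "OK URL" or "FAIL URL" for each argument
report_urls() {
  local url
  for url in "$@"; do
    if url_exists "$url"; then
      echo "OK $url"
    else
      echo "FAIL $url"
    fi
  done
}

# Example call (placeholder URL from earlier in this section):
# report_urls http://link.edu/filename
```

Any failure, including a network error, is reported as FAIL, so read it as "not retrievable right now" rather than proof that a file was removed. For password-protected resources, --ask-password makes wget prompt for the password instead of reading it from --password=, which keeps it out of your shell history and the process list.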


Further Reading

- Downloading online data using API

- Downloading online data using Python-based web scraping

- Downloading online repos using GIT: [GitHub, Bitbucket, SourceForge]

- Downloading a single folder or file from GitHub

- Remote data preview (without downloading)

- Viewing text files using UNIX commands

- Viewing PDF and PNG files using X11 SSH connection

- Viewing graphics in a terminal as the text-based ASCII art

- Mounting remote folder on a local machine
